Software testing is a loser's bet

[Chinese (zh-cn) translation of this post is available at http://www.labazhou.net/2015/03/software-testing-is-a-loser-bet/]

While reading this blog post, remember that testing does not aim to improve quality. The only way to improve quality is to change the product. And testing does not change it.

The goal of testing is to gather information about the product. That information can of course be used to improve the product.

By software testing I mean the checking we test engineers do during the development phase of a product, and in some cases after it. Can admins create new users? Will autosync recover from connection problems? Does this input field accept multibyte UTF-8 characters? And so on.

And, maybe more importantly, by software testing I do not mean unit tests, usability tests, performance tests, acceptance tests or any other kind of testing.

Checking was relevant in the 90's

The current model of test engineers doing lots of checking during the development phase and not doing much else is an artifact from the early days, the days before the Internet won every battle there was.

Before everything was a web page and before all our devices had constant Internet access, the only way to gather information about the product was to do testing. There weren't server logs, crash reports and UI metrics to look at.

Back in the day you got one build a week if you were lucky. The first viable unit testing framework was born in '98 (SUnit, the first of the xUnit family), so "if it compiles, it's good" was the norm.

The only way to gather any information about the product was to do a lot of checking.

Back to the present

Today things are totally different.

Soon all our devices will have constant Internet access. And if we skip operating systems and web browsers, I would guess that the majority of the software we consume is actually running on machines that the makers of the software own. I'm talking about web pages here.

So most software already runs on machines from which the developers can get really detailed usage data, error reports and all that nice logging information. None of this information was available 20 years ago.

And of course the same channel that we use to get those logs and reports can be used to ship and update the software.

20 years ago, when you shipped a software product, you pressed it on CDs, put them in cardboard boxes and literally shipped the product somewhere. And you had no idea who actually used the software, because it was bought from real stores and you were not the one selling it. Some retailer handled the transaction.

So you had no idea whether the software actually worked at all on the customer's machine. And even if you knew that some feature was hard to use or did not work in certain conditions, you had no way to update the software, because you did not know who used your product.

When the surrounding reality changes, we need to rethink how we use our resources. Back in the day the only sensible thing to do was to be as sure as possible that no bugs were shipped, because it was close to impossible to fix them and your software could not report home if there were any problems.

Now that this reasoning is out of the picture, is it still the smartest strategy to do as much checking before shipping as humanly possible? In my opinion it is not.

In my opinion today we should focus on making sure that the initial code quality is as good as possible, that the vision of the product is actually something our customers want and that we are actually following the vision. And we should monitor the usage of the product as much as possible. And in order to react to the data our product reports back to us, we must make sure that the product is easy to modify and maintain.
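To make "monitor the usage of the product" a bit more concrete, here is a minimal sketch of turning reported errors into a ranking of which features hurt the most. The log format (`LEVEL feature message`) and the `top_errors` function are assumptions made up for this illustration, not something any particular logging system gives you.

```python
from collections import Counter


def top_errors(log_lines, limit=3):
    """Count ERROR lines per feature.

    Assumes a hypothetical log format: "LEVEL feature free-text message".
    """
    counts = Counter()
    for line in log_lines:
        parts = line.split(maxsplit=2)
        # Ignore lines that are too short or are not errors.
        if len(parts) >= 2 and parts[0] == "ERROR":
            counts[parts[1]] += 1
    # Most frequently failing features first.
    return counts.most_common(limit)


# Made-up sample data, only to show the shape of the input.
sample = [
    "INFO autosync started",
    "ERROR autosync connection lost",
    "ERROR user-admin create failed",
    "ERROR autosync retry exhausted",
]

if __name__ == "__main__":
    for feature, count in top_errors(sample):
        print(feature, count)
```

With the sample above, `autosync` comes out on top with two errors, which is the kind of signal that tells you where to spend the next fix.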

Then what?

Instead of wasting all day checking stuff, we should help developers write better code. We should advocate for better development practices, we should teach them how to use continuous integration systems, better version control systems, unit testing, code review, static analysis and other best practices.

We should help product owners to test their ideas by building paper prototypes and doing hallway usability studies. We should ensure that the vision is actually implemented.

We should write small programs that automatically verify the functionality of core components of the product. I intentionally avoided the term "test automation" here, because I want to emphasize the type of skill that is needed.
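As a rough idea of what such a small program might look like, here is a sketch that checks one core component, including the multibyte UTF-8 question from earlier. The component `normalize_display_name` and its rules (trimming, a 64-character limit) are hypothetical stand-ins; in a real product you would import the actual component instead of defining it here.

```python
import unicodedata


def normalize_display_name(raw: str) -> str:
    """Hypothetical core component: clean up a user-supplied display name."""
    name = unicodedata.normalize("NFC", raw).strip()
    if not name:
        raise ValueError("display name must not be empty")
    if len(name) > 64:  # assumed limit, for illustration only
        raise ValueError("display name is too long")
    return name


def run_checks() -> list[str]:
    """Run every check and return a list of human-readable failures."""
    failures = []

    # Inputs that must be accepted, with the expected result.
    accepted = {
        "  Alice  ": "Alice",                        # whitespace is trimmed
        "Zo\u00eb": "Zo\u00eb",                      # Latin letter with diaeresis
        "\u65e5\u672c\u8a9e": "\u65e5\u672c\u8a9e",  # multibyte CJK text
        "e\u0301": "\u00e9",                         # combining accent is composed
    }
    for raw, expected in accepted.items():
        actual = normalize_display_name(raw)
        if actual != expected:
            failures.append(f"{raw!r}: expected {expected!r}, got {actual!r}")

    # Inputs that must be rejected.
    for bad in ("", "   ", "x" * 65):
        try:
            normalize_display_name(bad)
        except ValueError:
            continue
        failures.append(f"{bad!r}: expected a ValueError")

    return failures


if __name__ == "__main__":
    problems = run_checks()
    for problem in problems:
        print("FAIL:", problem)
    # A non-zero exit code lets a CI system notice the failure.
    raise SystemExit(1 if problems else 0)
```

The point is less the framework and more the habit: a plain script like this can run on every build and answers the same questions we would otherwise check by hand.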

We should do our best to ensure that the initial version of the product is as good as possible and that it is what was actually ordered. And further development of the product should be as easy as possible.

Why?

When the initial code quality is better, it is easier to continue working on the product. When there are automated test cases that verify the functionality of the core components, it is way safer to continue working on the product. When build processes and version control are in good shape, it is easier to continue working on the product. The same goes for peer-reviewed and unit-tested code, and so on. When we make sure that the code quality is good enough that changing and fixing the product is easy, we can react to changes more easily. Better code quality means that bugs are easier to fix, new developers can get up to speed on the product in less time, and so on.

We should drop the noble idea of shipping a heavily tested product, and instead focus on shipping a product that can be changed as easily as possible.

By helping product owners verify their ideas we can find our failures earlier. And when we make sure that the ideas are actually implemented, we actually get the product that was ordered.

TL;DR

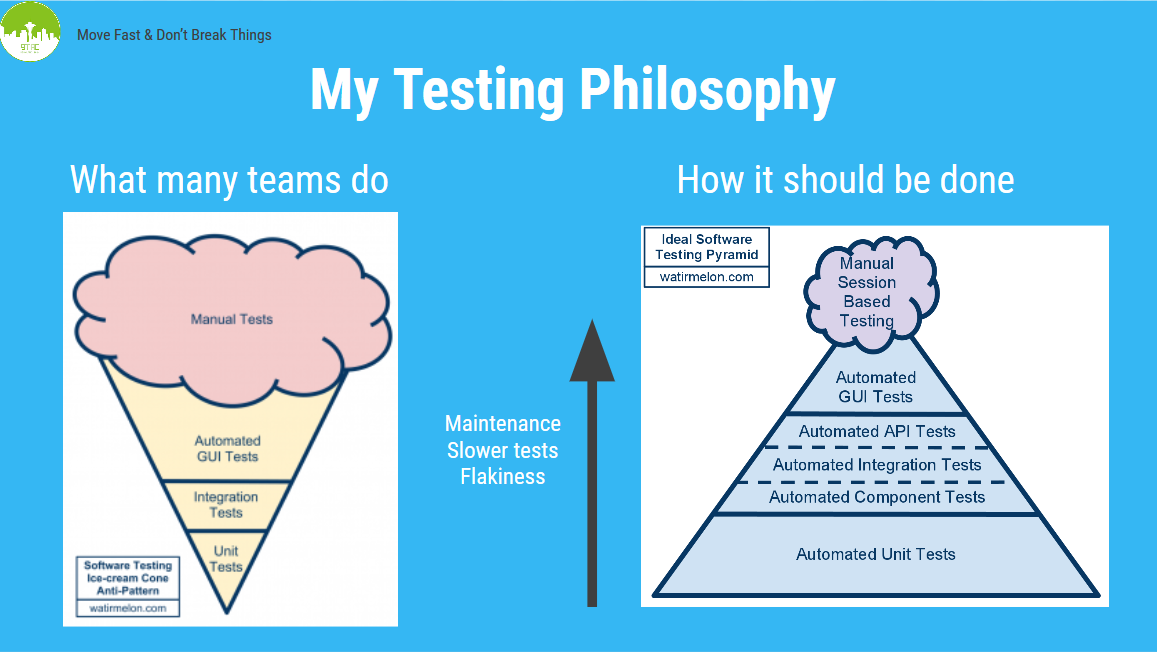

Here's a nice slide from GTAC 2014 to summarize most of this: